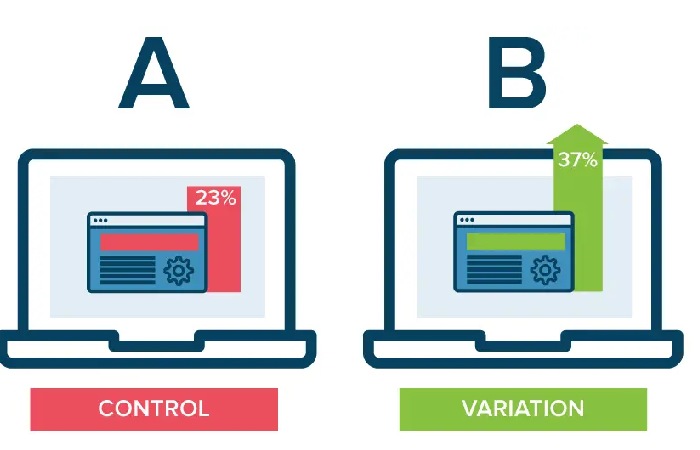

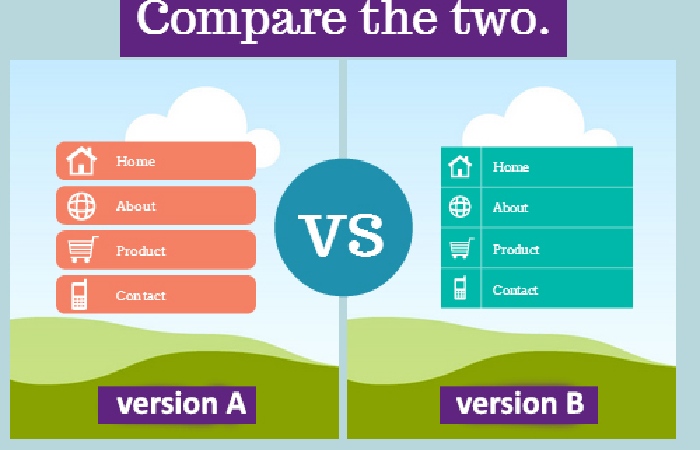

A/B testing is a method of market research that compares the control (A) version of a product with a modified (B) version. It lets you measure how much, and in what way, the changes affect the target metrics.

This method is often used in website and mobile app development to evaluate changes that may increase traffic and conversion rates, reduce bounce rates, lengthen session duration, and so on. It is used by product and data analysts, web developers, internet marketers, and other professionals in businesses of every size, from a global marketplace to a small website. With A/B testing, you can formulate and test hypotheses to decide which changes to make to your product to improve its performance.

What Is A/B Testing For?

Many professionals change a product based on intuition, personal experience, and similar factors. As a result, some of their decisions don't match reality and turn out to be ineffective.

By formulating and testing hypotheses, A/B testing helps you:

- Understand users' real needs, habits, and behavior, and the objective factors that influence them.

- Reduce the risk that a developer's subjective perception skews the decisions made.

- Allocate resources to solutions that have proven effective.

In web development, the following elements are tested using this technique:

- Text (wording, structure, length, font).

- Design, size, and placement of conversion buttons and forms.

- The logo and other corporate identity elements.

- Design and layout of the web page.

- Product price, various discounts and promotions.

- Unique selling proposition.

- Product images and other visual materials.

Types of A/B Testing

Simple A/B Tests

This is the classic method: two versions of the object under study (control and test) are compared, and they differ in only one parameter. For example, a landing page with a blue call-to-action button versus the same page with a yellow one. This type of test works well for targeted changes that don't affect the site's performance as a whole.

A/B/n Tests

Several variants of the source page run simultaneously alongside the control. Each variant differs from the control in the same single element, and the conversion rates of all variants are compared to get a result. For example, landing pages with rectangular, round, triangular, and trapezoidal call-to-action buttons. A/B/n testing lets you choose the best solution from several options.
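As a minimal sketch of the comparison step, the Python snippet below ranks variants by conversion rate. The variant names and counts are illustrative only, not real results. Because every extra variant adds another comparison against the control, the snippet also computes a Bonferroni-adjusted significance level; that correction is an assumption added here (one common, conservative choice), not something the text prescribes.

```python
# Minimal sketch: rank A/B/n variants by conversion rate.
# Variant names and counts below are hypothetical, for illustration only.


def rank_variants(results):
    """Return (variant, conversion_rate) pairs sorted from highest to lowest.

    results maps a variant name to a (conversions, visitors) tuple.
    """
    rates = {name: conv / visitors for name, (conv, visitors) in results.items()}
    return sorted(rates.items(), key=lambda item: item[1], reverse=True)


def bonferroni_alpha(alpha, n_variants):
    """Per-comparison significance level when each of the n_variants - 1
    test variants is compared with the control (Bonferroni correction)."""
    return alpha / (n_variants - 1)


results = {
    "rectangular (control)": (120, 2400),
    "round": (138, 2400),
    "triangular": (111, 2400),
    "trapezoidal": (129, 2400),
}

for name, rate in rank_variants(results):
    print(f"{name}: {rate:.2%}")

# With 4 variants (3 comparisons against the control), each comparison
# must reach p < 0.05 / 3 to count as significant.
print(bonferroni_alpha(0.05, len(results)))
```

The top-ranked variant is only a candidate: it still has to beat the control in a significance test at the adjusted level before it can be called the winner.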

How to Run the Test

Let's walk through A/B testing using the classic split test as an example, because A/B/n tests follow the same general procedure; the only difference is the number of variants tested. A split test is an experimental, statistical research method, so its reliability depends directly on following a number of strict rules.

Objectives and Metrics

Before running an A/B test, you need a clear idea of the result you want: for example, more traffic or conversions, or a lower bounce rate. The goal determines the metrics, the quantitative indicators used to judge the effect of the changes, such as the number of orders, the average order value, or the open rate of emails in a mailing list.
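The example metrics above are simple ratios of raw counts. The sketch below computes them for one test group; the `GroupCounts` fields are hypothetical names, assumed here for illustration, and would map onto whatever your analytics system actually records.

```python
# Minimal sketch: compute the example metrics from raw counts.
# The field names are hypothetical; adapt them to your analytics data.
from dataclasses import dataclass


@dataclass
class GroupCounts:
    visitors: int
    orders: int
    revenue: float
    emails_sent: int
    emails_opened: int


def safe_ratio(numerator, denominator):
    """Divide, returning 0.0 instead of raising when the denominator is 0."""
    return numerator / denominator if denominator else 0.0


def compute_metrics(c: GroupCounts) -> dict:
    return {
        "orders": c.orders,
        "conversion_rate": safe_ratio(c.orders, c.visitors),
        "average_order_value": safe_ratio(c.revenue, c.orders),
        "email_open_rate": safe_ratio(c.emails_opened, c.emails_sent),
    }


print(compute_metrics(GroupCounts(visitors=1000, orders=50, revenue=2500.0,
                                  emails_sent=200, emails_opened=50)))
```

Computing the same metrics the same way for every group matters more than the exact definitions: A and B are only comparable if they are measured identically.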

Hypothesis

The results of A/B testing depend directly on the hypothesis being tested. The hypothesis must state the object under analysis, the change made to it, and the expected result. For testing, it is split into two statistical hypotheses:

- The null hypothesis – the change has no effect, and any observed difference is due to chance.

- The alternative hypothesis – version B differs from version A by a statistically significant amount, that is, by more than chance alone would explain.
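If the target metric is a conversion rate, the pair of hypotheses can be written formally as follows. Here $p_A$ and $p_B$ (notation introduced for this sketch) are the true conversion rates of versions A and B, and $\alpha$ is the significance level chosen before the test starts (0.05 is a common convention, not a requirement):

$$
H_0:\ p_B = p_A \qquad\qquad H_1:\ p_B \neq p_A
$$

$$
\text{reject } H_0 \text{ if } p\text{-value} < \alpha
$$

This is the two-sided form. If you only care whether B is better, the one-sided alternative $H_1:\ p_B > p_A$ is also used.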

The Number of Tested Variables

Every website or mobile app contains dozens of elements that influence the user experience, traffic, conversion rates, and other target metrics. Changing several or all of them at once is a poor choice, because afterward it will be difficult to determine which variable drove the result. Therefore, a single split test should change only one element (though that element can have more than two variations).

Audience Distribution

To keep the split test clean, traffic should be split randomly and evenly between the control version and the version being tested. Users who see version A should not see version B. Suppose the product is already live and other changes are happening in parallel (for example, an advertising campaign). In that case, for reliable results, the traffic should be divided into three groups instead of two: the first two are shown the control version, and the third is shown the version under test. If the first two groups show matching results, external factors have not affected the validity of the test and its result can be trusted.
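One common way to get a random, even split in which a user never switches groups is to hash a stable user ID. This is an assumed implementation, not one the text prescribes, and `assign_group` and the experiment name are hypothetical. Passing three group labels gives the three-group design described above, where `A1` and `A2` both see the control.

```python
# Minimal sketch of deterministic, hash-based traffic splitting.
# assign_group and the experiment name are hypothetical illustrations.
import hashlib
from collections import Counter


def assign_group(user_id: str, experiment: str, groups=("A", "B")) -> str:
    """Map a user to one of `groups`, uniformly and reproducibly.

    The same (experiment, user_id) pair always hashes to the same group,
    so a user who was shown version A never sees version B.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    bucket = int(hashlib.sha256(key).hexdigest(), 16) % len(groups)
    return groups[bucket]


# Three-group split: A1 and A2 both get the control, B gets the test version.
groups = ("A1", "A2", "B")
counts = Counter(assign_group(f"user-{i}", "button-color", groups) for i in range(30_000))
print(counts)  # each group should hold roughly 10,000 users
```

Including the experiment name in the hashed key keeps the splits of different experiments independent of each other, so the same users don't always end up in the test group.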

Analyzing Results

At the end of the A/B test, compare the results of groups A and B and check whether the difference between them exceeds the significance threshold. You also need to assess in which direction the metric moved in version B, positive or negative. This shows whether the changes were justified.
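The text doesn't name a specific statistical test. For a conversion-rate metric, one standard choice (assumed here) is the two-sided two-proportion z-test, sketched below with only the Python standard library. It relies on the normal approximation, so it suits reasonably large samples; the 0.05 threshold in the usage lines is a common convention, and the counts are made up.

```python
# Minimal sketch: two-sided two-proportion z-test for conversion rates.
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, p_value) for H0: p_A == p_B against H1: p_A != p_B.

    Uses the pooled conversion rate under H0 to estimate the standard error.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    if se == 0:  # no variation at all (0% or 100% conversion in both groups)
        return 0.0, 1.0
    z = (p_b - p_a) / se
    p_value = 2 * NormalDist().cdf(-abs(z))
    return z, p_value


# Illustrative counts: 200 of 5,000 users converted on A, 250 of 5,000 on B.
z, p_value = two_proportion_z_test(200, 5000, 250, 5000)
alpha = 0.05
print(f"z = {z:.2f}, p = {p_value:.4f}, significant: {p_value < alpha}")
print("change in B:", "up" if z > 0 else "down" if z < 0 else "none")
```

The sign of `z` gives the direction of the change, and the p-value is compared against the threshold chosen before the test, not adjusted after seeing the data.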

If the split test shows no significant difference in the overall metrics between A and B, try segmenting the audience. For example, changing the subscription button from rectangular to round may not increase orders overall but may increase them among the female part of the audience. Segmentation can be done by geography, platform, gender, age, traffic source, and so on.
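Segment-level rates can be computed with a simple group-by, as in the sketch below, which assumes each record is a hypothetical `(segment, group, converted)` tuple. Keep in mind that checking many segments raises the chance that one of them looks significant purely by chance, so a segment-level finding is best treated as a new hypothesis and confirmed with a separate test.

```python
# Minimal sketch: conversion rate per (segment, group).
# Records are hypothetical (segment, group, converted) tuples.
from collections import defaultdict


def conversion_by_segment(records):
    """Return {segment: {group: conversion_rate}}."""
    # counts[segment][group] = [conversions, visitors]
    counts = defaultdict(lambda: defaultdict(lambda: [0, 0]))
    for segment, group, converted in records:
        cell = counts[segment][group]
        cell[0] += int(converted)
        cell[1] += 1
    return {
        segment: {group: conv / visitors for group, (conv, visitors) in by_group.items()}
        for segment, by_group in counts.items()
    }


records = [
    ("female", "A", False), ("female", "A", True),
    ("female", "B", True), ("female", "B", True),
    ("male", "A", True), ("male", "A", False),
    ("male", "B", False), ("male", "B", True),
]
print(conversion_by_segment(records))
```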

A small difference between the target metrics of the control and test groups does not always mean there is no effect. The difference should be weighed against the scale of the change that was made.
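One way to put a small difference in context (an approach assumed here; the text doesn't prescribe one) is to report the relative lift together with a confidence interval for the absolute difference. If the interval excludes zero, even a small lift is probably real, and its size can then be compared with the size of the change. The sketch uses an unpooled normal-approximation (Wald) interval and made-up counts.

```python
# Minimal sketch: absolute difference, relative lift, and a Wald confidence
# interval for the difference between two conversion rates.
from math import sqrt
from statistics import NormalDist


def lift_with_ci(conv_a, n_a, conv_b, n_b, confidence=0.95):
    """Return (difference, relative_lift, (ci_low, ci_high)) for p_B - p_A."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    diff = p_b - p_a
    # Relative lift is undefined when A never converts; NaN marks that case.
    relative_lift = diff / p_a if p_a else float("nan")
    # Unpooled standard error of the difference.
    se = sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # about 1.96 for 95%
    return diff, relative_lift, (diff - z * se, diff + z * se)


diff, lift, (low, high) = lift_with_ci(200, 5000, 250, 5000)
print(f"difference: {diff:.4f} ({lift:+.1%} relative), 95% CI: [{low:.4f}, {high:.4f}]")
```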

If the test was carried out correctly, showed a statistically significant result, and the changes paid off, that is still no reason to stop. First, besides the call-to-action button, the same page has a header, the main text, images and photos, a timer showing how long the promotion lasts, and many other elements, all of which influence user behavior. Second, it is recommended to run several iterations of the test on the same element, with the winning version of each test becoming the control for the next one.